AI is Learning to Read Your Mind (this is not a joke)

The ability to translate thoughts directly into text has long been a foundational trope of science fiction. What was once fantasy, however, is rapidly becoming reality.

The same artificial intelligence (AI) algorithms that write our emails, generate pictures, and give us recipes are now being trained to eavesdrop on the quiet murmur of our neurons. Bit by bit, the boundary between language and thought is dissolving. In research laboratories from Austin to Kyoto, scientists are teaching machines to translate brain activity into words, turning the flicker of a memory, the shape of an image, or the feeling of a sentence into text. Once the code of thought is cracked, will the world remain the same?

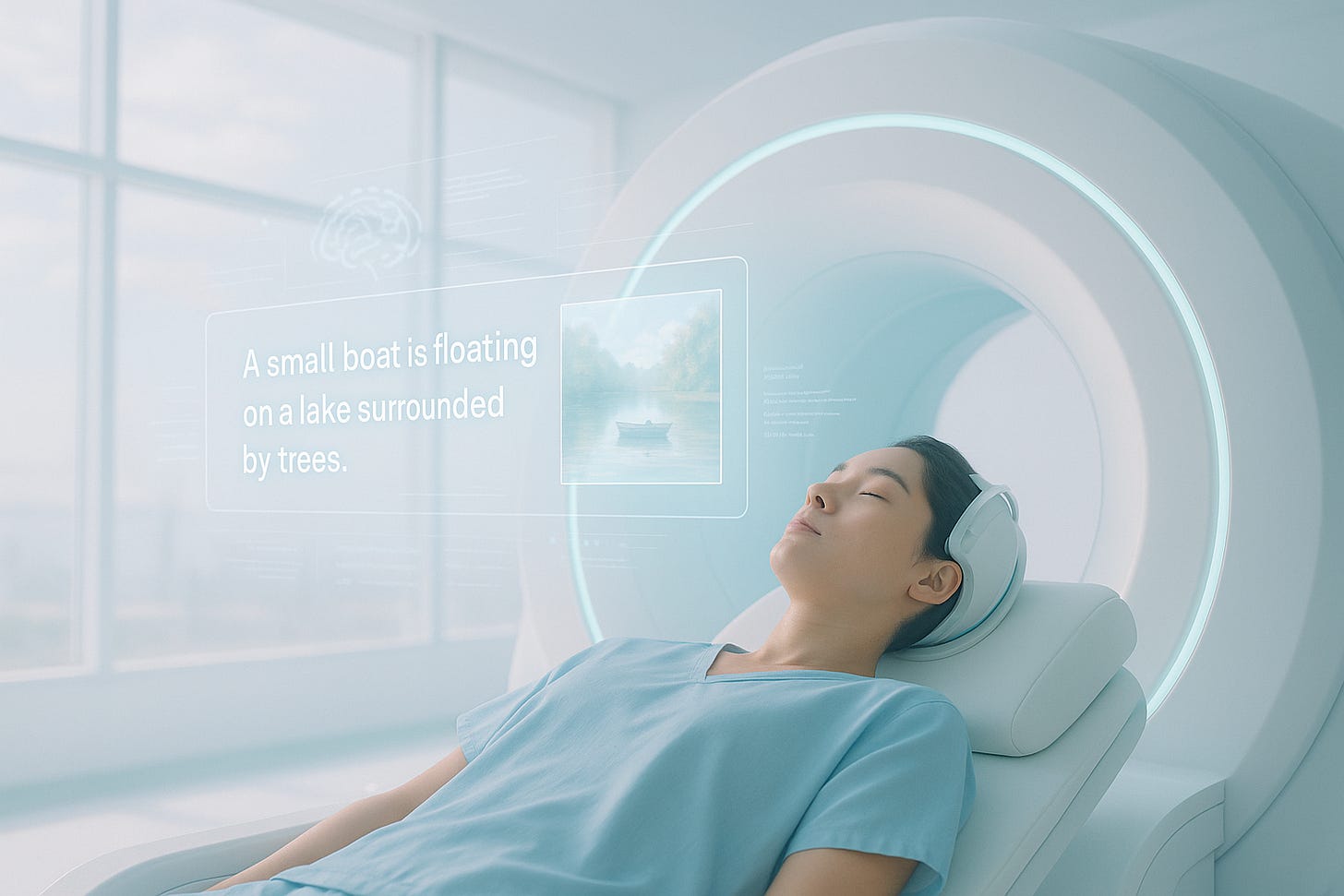

We are looking into a future where AI and neuroscience make the mind readable. AI algorithms learn to link patterns of brain activity, captured by brain imaging, to meaning.

A new paradigm

At the forefront of this revolution is a groundbreaking method that generates rich, descriptive text mirroring the semantic content of brain activity. Detailed in a recent scientific publication by Tomoyasu Horikawa (*) of the Communication Science Laboratories in Kanagawa, Japan, this mind-captioning approach represents a major leap forward: it moves beyond predicting individual words to generating complex sentences from the brain’s internal representations of the external world.

The methodology is elegant in its two-stage design. In the first stage, Horikawa records brain activity with functional magnetic resonance imaging (fMRI) while a person watches video clips. A decoder guided by a deep language model then translates the fMRI signals into a set of semantic features, a numerical summary of the meaning of what the person is watching. The magic happens in the second stage. Instead of simply matching these features against a pre-set database of captions, the system runs an optimization process that works like a sculptor facing a block of stone. It begins with a meaningless word and, guided by the brain data, repeatedly swaps and refines words, chiseling away until a sentence emerges whose semantic “shape” closely matches that of the thought. Because the description is generated rather than retrieved, it is rooted in the brain’s own representation of an experience rather than borrowed from a pre-written caption.

The significance of Horikawa’s work is twofold. First, the generated text captures structured information: word order and the relationships between words carry meaning. In one experiment, simply shuffling the words in the generated captions significantly reduced their accuracy, indicating that the method decodes not just what the brain is seeing but how it structures that information. Second, the method can generate detailed descriptions without relying on the brain’s canonical language network. This is a critical finding because it implies that the technique is decoding non-verbal thought (technically speaking, the brain’s raw semantic representation of a visual experience) before it is put into words.
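To make the second stage more concrete, here is a minimal toy sketch in Python of caption generation by iterative word substitution. It is not Horikawa’s implementation. The tiny vocabulary, the three-dimensional word vectors, the averaging `embed` function and the greedy search in `refine_caption` are all hypothetical stand-ins, and the target vector simply plays the role of the semantic features that the first stage would decode from fMRI.

```python
import math

# Hypothetical 3-D "semantic" vectors for a tiny vocabulary (made up for
# illustration; real systems use features from a deep language model).
VOCAB = {
    "<blank>": (0.0, 0.0, 0.0),  # meaningless starting word
    "dog": (1.0, 0.0, 0.0),
    "ball": (0.0, 1.0, 0.0),
    "beach": (0.0, 0.0, 1.0),
    "runs": (0.5, 0.5, 0.0),
    "sleeps": (0.5, -0.5, 0.0),
}


def embed(words):
    """Toy sentence feature: the average of the word vectors (ignores order)."""
    dims = len(VOCAB[words[0]])
    total = [0.0] * dims
    for word in words:
        for i, value in enumerate(VOCAB[word]):
            total[i] += value
    return [t / len(words) for t in total]


def cosine(a, b):
    """Cosine similarity; defined as 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def refine_caption(target, length=3, max_rounds=20):
    """Greedy word substitution: start from blanks and keep any single-word
    swap that brings the caption's features closer to the target features."""
    words = ["<blank>"] * length
    best = cosine(embed(words), target)
    for _ in range(max_rounds):
        improved = False
        for pos in range(length):
            for candidate in VOCAB:
                trial = words[:pos] + [candidate] + words[pos + 1:]
                score = cosine(embed(trial), target)
                if score > best + 1e-12:  # accept only strict improvements
                    words, best, improved = trial, score, True
        if not improved:  # a full pass with no better swap: stop
            break
    return words, best


# Stand-in for stage one: pretend these features were decoded from fMRI.
decoded_features = embed(["dog", "runs", "beach"])
caption, similarity = refine_caption(decoded_features)
print(caption, round(similarity, 3))
```

Because this toy feature averages word vectors, it ignores word order: shuffling the caption leaves its score unchanged. The language-model features in the real work presumably depend on word order, which is why shuffling the generated captions reduced their accuracy.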

The global race to decode the brain

The quest to decode the brain is a rapidly accelerating global effort, with multiple research teams tackling the problem from different angles. The first major piece of the puzzle, decoding narrative thought, was put in place by researchers at the University of Texas at Austin. Jerry Tang, Alexander Huth and their colleagues used GPT-1, an early generative language model from OpenAI and a predecessor of the models behind ChatGPT, to reconstruct flowing language from fMRI data. Their system was trained on many hours of recordings of each participant listening to stories, allowing the algorithm to learn the patterns linking that person’s brain activity to meaning. The result was a system that could recover the gist of the stories, usually as a paraphrase rather than word for word. Is this mind reading? Maybe. But Alexander Huth is careful to manage expectations, preferring the term “brain decoding.” Whether the distinction is meaningful or merely semantic depends on how one defines “decoding” and “reading.” How do the two studies relate? Huth’s team focused on reconstructing the gist of continuous speech, whereas Horikawa tackled the possibly more profound challenge of evolving rich descriptions directly from the brain’s non-verbal representation of the world.

Other teams are engineering more powerful and efficient ways to bridge the gap between brain and language. A framework called MindSemantix takes a more integrated approach. It is like building a direct translator that lets an AI algorithm already fluent in human language learn the dialect of an individual’s brain activity. In the process, the AI can describe what a person is seeing from their brain activity alone.

Most recently, the frontier has moved from passive decoding to active interaction. A framework called BrainChat achieves what its developers present as the first “fMRI question answering.” It shifts the technology from describing what a person sees to asking specific questions about what the person is seeing or thinking. This is transformative because it opens the door to interactive decoding. The common thread weaving through these diverse projects is the powerful synergy between advanced AI and non-invasive brain imaging. This fusion of technologies is creating tools with capabilities we are only just beginning to understand.

The dual faces of brain decoding

The most profound and benevolent application of this technology is the potential to restore communication for people who have lost the ability to speak but whose minds remain active and aware. It could be life-altering for people with locked-in syndrome, who are fully conscious but unable to move or speak. Stroke survivors whose ability to produce speech has been damaged could also benefit, as could patients with aphasia or other difficulties in expressing language. By tapping into non-verbal thought, these systems could offer an entirely alternative channel of communication.

Naturally, such technologies raise deep societal fears of mind-reading, Big Brother machines that will erode our most private treasure: our thoughts. Could they be used to extract a confession or expose our secrets? They might. Researchers are therefore paying close attention to safeguards, even though the technology is currently far from true mind reading. The Texas study, for example, revealed two critical limitations that act as safeguards. First, a brain decoder must be trained extensively on a single individual’s brain. A model trained on one person’s fMRI data performs barely above chance when trying to decode another person’s brain activity, so a person must volunteer for many hours of training in an fMRI scanner for a decoder to work on them. Second, and critically, a person can consciously thwart the decoder: in the experiments, participants significantly lowered decoding performance simply by actively thinking about other things, for instance by silently telling themselves a different story. These safeguards, however, may not last forever, and they should not give us a false sense of security.

The future is even weirder

Horikawa’s success in captioning the mind is impressive. But it is not an isolated achievement: around the globe, teams are racing toward the same frontier. These parallel efforts represent a form of convergent evolution, with researchers collectively solving the same grand puzzle by working on different pieces. Together, they paint a picture of a technological revolution in the making, moving from reconstructing narrative thought, to captioning non-verbal concepts, to building integrated pipelines, and finally to enabling an interactive dialogue with the brain.

The trajectory of this research is startling. We are moving rapidly from decoding single words to reconstructing the gist of a story, and now to evolving full descriptions of thoughts and recalled memories. If we project this trend into a future where the technology is mature, portable, and seamlessly integrated with powerful AI systems of general intelligence, we are forced to contemplate applications that border on the metaphysical. Imagine a team of architects and engineers designing a complex structure through a virtual collective brain in the cloud that draws on the thoughts of every member, not by arguing over blueprints but by directly sharing their mental models. What emerges might not be just a new tool, but a new form of collective consciousness.

Importantly, like any revolutionary tool, from the printing press to the internet, brain decoding presents both incredible promise and profound ethical challenges for humanity. It demands our attention because its potential to heal the broken mind runs parallel to its potential to erode the last bastion of personal privacy: our inner thoughts.