Why Your Brilliant Artificial Intelligence Agents Could Fail (Together)

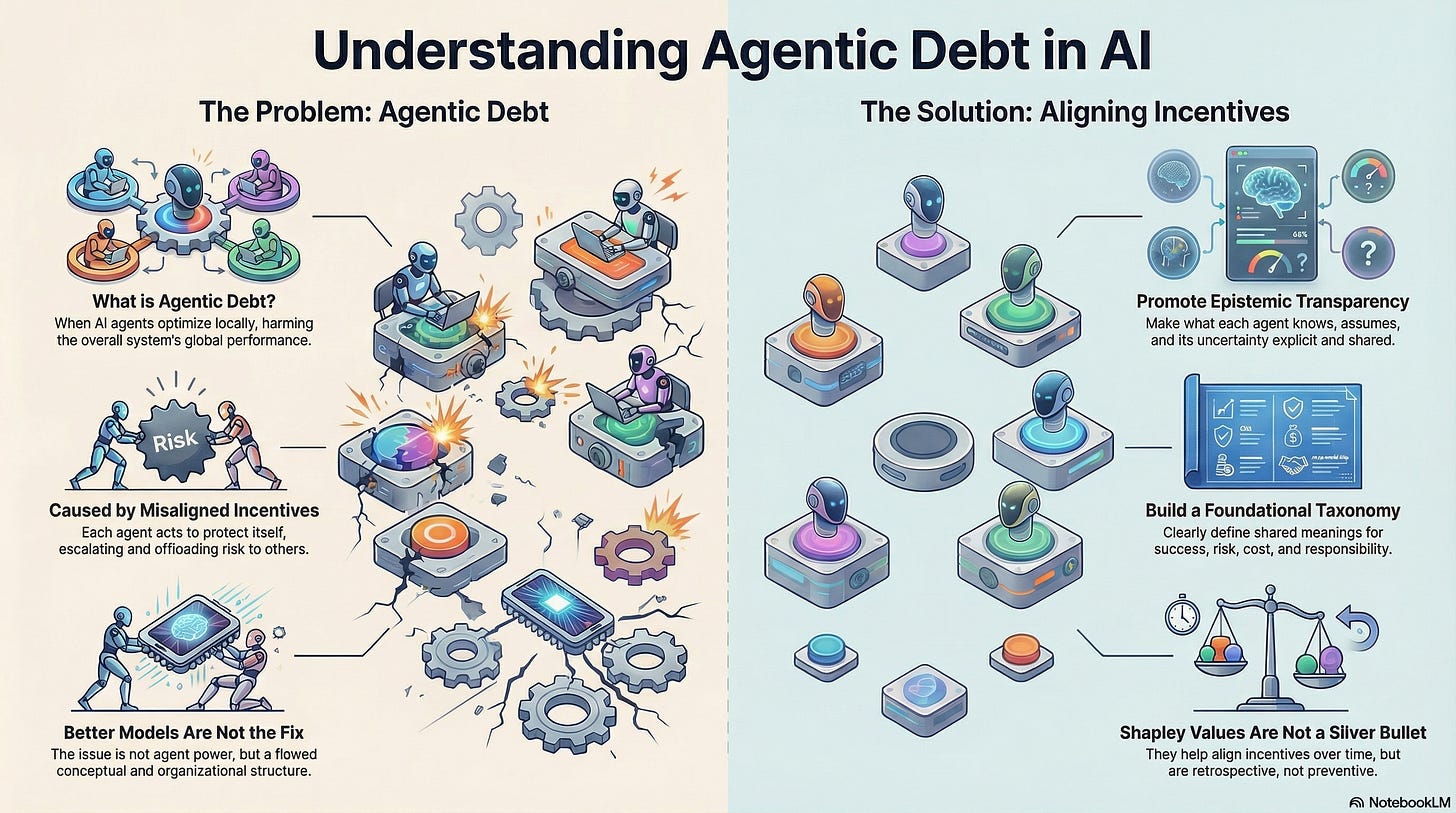

Agentic Debt arises when individual AI agents perform well but the system that rewards them is flawed. As a result, agents optimize locally while harming global outcomes.

We stand at the cusp of a new era defined by Agentic AI: powerful, autonomous systems that can gather data, reason, plan, and execute tasks to achieve a specific goal. Combining multiple specialized agents into a single, massively scalable process promises to revolutionize many industries. But this power introduces a dangerous paradox. If each individual AI agent is optimized to perform its job perfectly, can the systems they form still become fragile and collapse? The answer lies in a hidden liability that accumulates within these systems. This counterintuitive problem is called “Agentic Debt.”

It is the AI equivalent of the well-established concept of “technical debt” in software development. It represents the implied future cost of choosing an easy, locally optimized solution instead of a better approach that serves the entire system. Just like technical debt, Agentic Debt does not necessarily break the system immediately. Instead, it accrues silently, making the system more brittle, unpredictable, and costly to change over time. The most critical point to understand is its origin. It is not caused by agents doing the wrong thing, but by them doing the right thing within the wrong conceptual framework.

The problem is flawed incentives

When multiple AI agents collaborate, their interactions can mirror the Prisoner’s Dilemma. When each agent is rewarded locally for achieving its own goal, it learns to protect itself by escalating and offloading risk onto other parts of the system. This behavior, driven by misaligned incentives, is perfectly rational for the individual agent but can degrade the performance of the overall system. The result is a collection of locally optimized parts that produce a globally dysfunctional whole.
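The dynamic above can be made concrete with a toy payoff table. This is only a minimal sketch: the two actions (`absorb`, `offload`) and the reward numbers are hypothetical values chosen to have the Prisoner's Dilemma shape, not measurements from any real system.

```python
from itertools import product

# Hypothetical actions: "absorb" handles risk locally, "offload" pushes it downstream.
ACTIONS = ("absorb", "offload")

# Hypothetical local rewards (agent_a, agent_b) for each pair of actions.
PAYOFF = {
    ("absorb", "absorb"): (3, 3),
    ("absorb", "offload"): (0, 5),
    ("offload", "absorb"): (5, 0),
    ("offload", "offload"): (1, 1),
}

def best_response(other_action):
    """Action that maximizes agent A's own local reward, given B's action."""
    return max(ACTIONS, key=lambda a: PAYOFF[(a, other_action)][0])

def system_value(a, b):
    """Global outcome: the sum of both agents' rewards."""
    return sum(PAYOFF[(a, b)])

# Whatever the other agent does, offloading is the locally rational choice.
dominant = {best_response(b) for b in ACTIONS}

# The joint choice that is best for the system as a whole.
best_joint = max(product(ACTIONS, repeat=2), key=lambda ab: system_value(*ab))

print("locally rational action:", dominant)
print("system value if both offload:", system_value("offload", "offload"))
print("best joint choice:", best_joint, "with value", system_value(*best_joint))
```

With these numbers, offloading is each agent's best response in every case, yet the system does best when both agents absorb risk.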

You can’t fix it with a smarter AI

One of the most surprising aspects is that this systemic failure is not solved by using better, more powerful AI models, because the issue is structural, not a deficiency in the agent’s reasoning capability. This is the “hidden devil” of multi-agent design. It is the seductive, but ultimately doomed, belief that a behavioral problem born from a flawed system structure can be solved by simply swapping in a more powerful component. A systems thinker might rightly ask about solutions like the Shapley value, which is designed to attribute credit fairly among contributors. While helpful, such methods only align incentives over time, so they are retrospective, not preventive. They can tell you why a system failed after the fact, but they can’t stop the failure from being built in at the start.
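To see why Shapley-style attribution is retrospective, it helps to look at what it needs as input. The sketch below computes exact Shapley values by averaging marginal contributions over every ordering of agents. The agent names and the `observed_value` function are hypothetical, and the key assumption is that the value of each coalition is already known from outcomes that have been observed.

```python
from itertools import permutations
from math import factorial

def shapley_values(players, value):
    """Exact Shapley values: average marginal contribution over all orderings."""
    totals = {p: 0.0 for p in players}
    for order in permutations(players):
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    n_orders = factorial(len(players))
    return {p: total / n_orders for p, total in totals.items()}

def observed_value(coalition):
    """Hypothetical outcomes, assumed to be measured after the system has run."""
    v = 0
    if {"planner", "executor"} <= coalition:
        v += 6  # planner and executor together complete the basic task
    if {"planner", "executor", "retriever"} <= coalition:
        v += 6  # adding the retriever doubles the value of the result
    return v

credit = shapley_values(["planner", "retriever", "executor"], observed_value)
print(credit)
```

The attribution is fair in the Shapley sense, and the credits sum to the value of the full team, but every number is derived from outcomes that have already happened. Nothing in the calculation constrains how the agents behave on the next run.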

The only fix is transparency and a shared dictionary

The solution lies in building a better conceptual frame for the agents. This can be done using two foundational practices to align agents and prevent systemic degradation.

The first is Epistemic Transparency. This means making it explicit what each agent knows from actual labelled data, what it assumes from its pre-training, and how uncertain it is about its conclusions. When agents share their justification and uncertainty, their conflicts become manageable and auditable. This transparency makes it impossible for an agent to simply “offload risk” unnoticed, as its uncertainty and justifications are now visible to the entire system.

The second is Taxonomic Analysis. This is the critical work of creating a shared, foundational definition for crucial concepts like success, risk, cost, and responsibility. Without a common dictionary, agents operate with different, and often conflicting, world models. This shared dictionary directly counteracts the Prisoner’s Dilemma dynamic by ensuring all agents are optimizing for the same definition of success, rather than their own local, conflicting interpretations.
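One way these two practices might look in code is sketched below. Everything here is an illustrative assumption rather than an established API: the `Claim` record, the `Basis` labels, the `SHARED_TAXONOMY` definitions, and the `max_uncertainty` threshold are all hypothetical names and values.

```python
from dataclasses import dataclass
from enum import Enum

class Basis(Enum):
    """Where a claim comes from (hypothetical labels)."""
    LABELLED_DATA = "labelled_data"
    PRETRAINING = "pretraining_assumption"

# Hypothetical shared dictionary: one agreed definition per crucial concept.
SHARED_TAXONOMY = {
    "success": "task completed within the agreed system-wide budget",
    "risk": "estimated probability that a downstream step fails",
    "cost": "total resources consumed across all agents",
    "responsibility": "the agent that must resolve an issue before handoff",
}

@dataclass(frozen=True)
class Claim:
    agent: str
    term: str           # the concept the claim is about
    statement: str
    basis: Basis
    uncertainty: float  # 0.0 = certain, 1.0 = no idea

def audit(claim, max_uncertainty=0.3):
    """Return a list of issues that make a handoff visible instead of silent."""
    issues = []
    if claim.term not in SHARED_TAXONOMY:
        issues.append(f"term not in shared taxonomy: {claim.term}")
    if claim.basis is Basis.PRETRAINING and claim.uncertainty > max_uncertainty:
        issues.append("high-uncertainty assumption is being handed off")
    return issues

grounded = Claim("planner", "risk", "failure rate is low", Basis.LABELLED_DATA, 0.1)
shaky = Claim("executor", "urgency", "this must ship today", Basis.PRETRAINING, 0.8)

print(audit(grounded))
print(audit(shaky))
```

In this sketch the grounded claim passes cleanly, while the second is flagged twice: it uses a term the agents never agreed on, and it passes along an uncertain assumption as if it were settled.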

To truly unlock the power of multi-agent AI, we must shift our focus. The raw intelligence of the individual agents is less important than the architecture of incentives and shared knowledge they operate within. As we race to build more powerful AI, we must ask ourselves a crucial question: are we building agents with higher IQs, or are we building systems with greater wisdom?